In the fast-evolving landscape of computing, edge computing has emerged as a pivotal player, promising to revolutionize the way we process and analyze data and make mission-critical decisions.

As organizations increasingly seek efficient and responsive solutions, the exploration of diverse edge deployment architectures and their benefits and limitations becomes crucial.

In this article, we look at the deployment architectures being adopted in industry and shed light on some of the practical applications being built on top of them.

Edge Computing: A Brief Overview

Edge computing is a paradigm shift that brings computation, data storage and critical decision making closer to the data source, minimizing latency and optimizing bandwidth usage.

Unlike traditional cloud computing models that centralize processing in remote servers, edge computing decentralizes it, fostering a more efficient and responsive ecosystem.

As organizations increasingly demand real-time insights, ultra-low latency, and resilient distributed systems, Edge Computing is becoming mission-critical.

To help professionals move confidently from concepts to execution, our course “Edge Computing Essentials: Concepts to Real-World Use Cases” provides a structured, hands-on learning path covering core principles, architecture, platforms, and enabling technologies—directly mapped to real-world industrial use cases.

If you’re looking to build in-demand skills and accelerate your career in Edge Computing, this is the right place to start.

Edge Deployment Approaches Being Adopted in Industry

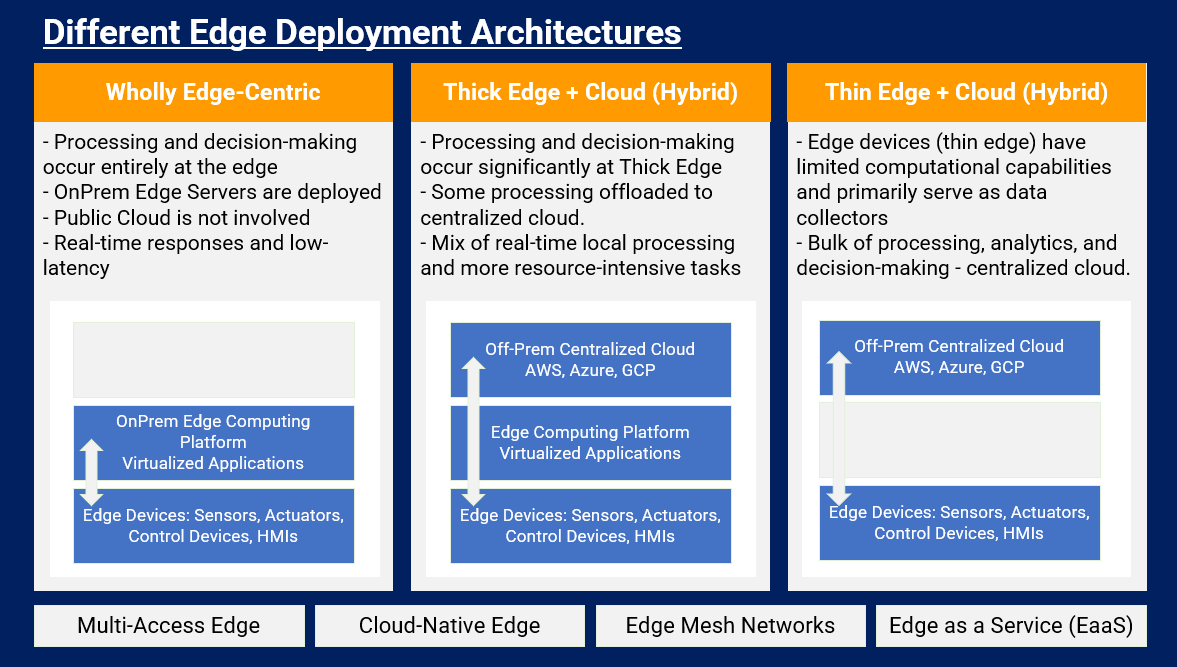

The following are three major edge deployment approaches being adopted in industry as of 2024:

Wholly Edge-Centric Deployments

In a wholly edge-centric deployment, all computing resources are located at the edge of the network, so data can be processed and acted on immediately without relying on centralized cloud servers. This approach is ideal for scenarios where low latency is critical and real-time decision-making is paramount.
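As a rough illustration of this idea, the sketch below makes a control decision entirely on the device, with no network call. The `Reading` type, the `decide_locally` function, and the temperature limits are hypothetical names and values chosen for the example, not part of any specific edge platform.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """One sensor sample (hypothetical structure for this sketch)."""
    sensor_id: str
    temperature_c: float


def decide_locally(reading: Reading, limit_c: float = 80.0) -> str:
    """Return an action using only data available on the edge device."""
    # No network call is made here: the decision never leaves the device.
    if reading.temperature_c >= limit_c:
        return "shutdown"
    if reading.temperature_c >= 0.9 * limit_c:
        return "throttle"
    return "continue"


# Assumed sample data standing in for a live sensor feed.
readings = [
    Reading("press-1", 65.0),
    Reading("press-1", 74.5),
    Reading("press-1", 81.2),
]
actions = [decide_locally(r) for r in readings]
print(actions)
```

Because every input the function needs is already on the device, the decision still works if the link to a central server is down, which is the property this deployment style is chosen for.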

Benefits of Wholly Edge-Centric Deployments

- Low Latency: Immediate processing at the edge reduces communication delays.

- Autonomy: Independence from centralized servers enhances reliability and security.

- Scalability: Easily scalable by adding more edge devices as needed.

Limitations of Wholly Edge-Centric Deployments

- Limited Processing Power: May be constrained by the computational capacity of edge devices, unless full-fledged on-premises data centers are deployed.

- Resource Intensity: Resource-intensive applications, such as large-scale analytics, may exceed what the edge hardware can handle on its own.

Use Cases and Examples of Wholly Edge-Centric Deployments

- Industrial IoT: Wholly edge-centric deployments excel in industries like manufacturing, where immediate feedback and control are crucial for optimizing production processes.

- Autonomous Vehicles: Real-time decision-making for autonomous vehicles relies on quick data processing at the edge.

Thick Edge with Cloud (Hybrid) Deployment

In a thick edge with cloud deployment, substantial processing occurs at the edge, but more resource-intensive tasks, like analytics and long-term data storage, are offloaded to the cloud.

This hybrid model balances the advantages of both edge and cloud computing, offering versatility in various applications.
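One way to picture this split is the minimal sketch below: the latency-sensitive check runs on the edge node, while only a compact summary is queued for the cloud. The `ThickEdgeNode` class, its threshold, and the in-memory `cloud_outbox` list are assumptions made for illustration; a real system would replace the list with an actual upload mechanism.

```python
from collections import deque
from statistics import mean


class ThickEdgeNode:
    """Hypothetical edge node: acts locally, defers heavy work to the cloud."""

    def __init__(self, alarm_threshold: float, window: int = 5):
        self.alarm_threshold = alarm_threshold
        self.window = window
        self.recent = deque(maxlen=window)
        self.cloud_outbox = []  # stand-in for a real upload queue

    def handle(self, value: float) -> bool:
        """Process one reading at the edge; return True if an alarm fired."""
        self.recent.append(value)
        alarm = value > self.alarm_threshold  # time-critical check stays local
        if len(self.recent) == self.window:
            # Only a small summary is queued for cloud analytics and storage.
            self.cloud_outbox.append(
                {"mean": mean(self.recent), "max": max(self.recent)}
            )
            self.recent.clear()
        return alarm


node = ThickEdgeNode(alarm_threshold=100.0, window=3)
alarms = [node.handle(v) for v in [90.0, 120.0, 95.0, 80.0]]
print(alarms)
print(len(node.cloud_outbox))
```

The alarm decision never waits on the network, while the summaries can be uploaded whenever connectivity and bandwidth allow.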

Benefits of Thick Edge with Cloud Deployment

- Balanced Performance: Combines the low-latency benefits of edge computing with the robust computational power of the cloud.

- Flexibility: Adaptable to dynamic workloads and varying computational requirements.

- Cost Efficiency: Optimizes resource usage, potentially reducing overall infrastructure costs.

Limitations of Thick Edge with Cloud Deployment

- Latency Trade-off: Latency is lower than in a purely cloud-based solution but higher than in a wholly edge-centric deployment.

- Complexity: Managing the synchronization between edge and cloud resources can be intricate.

Use Cases and Examples of Thick Edge with Cloud Deployment

- Smart Cities: Thick edge with cloud is effective for smart city applications, where localized processing is essential, but analytics and long-term data storage may occur in the cloud.

- Augmented Reality (AR): AR applications benefit from the immediate processing of edge computing and the cloud's ability to handle complex data rendering.

Thin Edge with Cloud (Hybrid) Deployment

In a thin edge with cloud hybrid deployment, the edge still handles data collection and lightweight processing, but the cloud plays a more substantial role than in the thick edge model.

This approach is suitable for applications where latency is not as critical, and periodic data analysis can be offloaded to the cloud.
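A minimal sketch of this pattern might look like the following: the edge device only buffers readings and forwards them in batches, leaving the analysis to the cloud. The `ThinEdgeCollector` class and the `upload` callable are hypothetical; here `upload` simply appends to a list so the example runs without a network.

```python
import json


class ThinEdgeCollector:
    """Hypothetical thin edge device: buffers readings, uploads in batches."""

    def __init__(self, batch_size, upload):
        self.batch_size = batch_size
        self.upload = upload  # callable supplied by the caller (assumed)
        self.buffer = []

    def collect(self, reading: dict) -> None:
        """Store a reading and upload once a full batch has accumulated."""
        self.buffer.append(reading)
        if len(self.buffer) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        """Send whatever is buffered as one JSON payload."""
        if self.buffer:
            self.upload(json.dumps(self.buffer))
            self.buffer = []


uploaded = []  # stand-in for the cloud endpoint
collector = ThinEdgeCollector(batch_size=2, upload=uploaded.append)
for i in range(5):
    collector.collect({"sensor": "air-1", "pm25": 10 + i})
collector.flush()  # push the final partial batch
print(len(uploaded))
```

Batching keeps the device logic small and the number of uploads low, at the cost of results arriving only after the cloud has processed each batch.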

Benefits of Thin Edge with Cloud Deployment

- Optimized Resource Usage: Strikes a balance between edge and cloud resources, optimizing resource consumption.

- Adaptability: Well-suited for applications with varying computational demands over time.

- Cost-Effective: Potentially reduces infrastructure costs compared to a wholly cloud-centric approach.

Limitations of Thin Edge with Cloud Deployment

- Moderate Latency: Offers lower latency than a purely cloud-based solution but may not meet the demands of highly time-sensitive applications.

- Dependency on Cloud: Relies on cloud resources for tasks beyond the edge's capacity.

Use Cases and Examples of Thin Edge with Cloud Deployment

- Remote Monitoring Systems: Thin edge with cloud deployments are effective in scenarios where periodic data analysis from remote sensors is necessary, but immediate response times are not critical.

- Environmental Monitoring: Applications involving periodic analysis of large datasets, such as environmental monitoring, can benefit from the computational power of the cloud while leveraging edge devices for real-time data collection.

Other Edge Deployment Approaches

Besides the three main strategies described above, there are other edge deployment approaches, each with its own strengths and applications:

Multi-Access Edge

Tailored for 5G networks, multi-access edge computing optimizes application performance by deploying resources at the network edge, enhancing the capabilities of latency-sensitive applications.

Cloud-Native Edge

This approach leverages cloud-native principles for edge deployments, ensuring seamless scalability, agility, and ease of management. It is particularly beneficial for dynamic workloads and applications.

Edge Mesh Networks

In this approach, edge devices collaborate by forming a mesh network. This enhances reliability and performance, making it suitable for scenarios like collaborative robotics and distributed sensor networks.
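To make the collaboration idea concrete, here is a small sketch in which a node hands each task to the least-loaded online node among itself and its direct peers. The `MeshNode` class and its load-based selection rule are assumptions for illustration only; real mesh networks use their own discovery and routing protocols.

```python
class MeshNode:
    """Hypothetical mesh participant that can share work with direct peers."""

    def __init__(self, name):
        self.name = name
        self.online = True
        self.load = 0
        self.peers = []

    def connect(self, other):
        """Create a two-way link between this node and another."""
        self.peers.append(other)
        other.peers.append(self)

    def submit(self, task):
        """Assign the task to the least-loaded online node in reach."""
        candidates = [n for n in [self, *self.peers] if n.online]
        if not candidates:
            raise RuntimeError("no online node reachable")
        target = min(candidates, key=lambda n: n.load)
        target.load += 1
        return target.name


a, b, c = MeshNode("a"), MeshNode("b"), MeshNode("c")
a.connect(b)
a.connect(c)
a.load = 2         # node a is already busy
b.online = False   # node b has failed
handled = [a.submit("inspect-part"), a.submit("inspect-part")]
print(handled)
```

Even with one peer offline and the receiving node busy, the work still gets done by another device, which is the reliability benefit described above.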

Edge-as-a-Service

Providing a scalable and on-demand model for edge resources, Edge-as-a-Service offers flexibility and cost-effectiveness. It allows organizations to scale their edge infrastructure based on evolving needs.

Practical Applications of Edge Deployment

- Healthcare: Real-time patient monitoring and instant data analysis at the edge for timely decision-making.

- Manufacturing: Integration of edge computing in industrial IoT for predictive maintenance and optimal production processes.

- Smart Cities: Leveraging edge technologies like fog computing for efficient traffic management and intelligent surveillance systems.

- Retail: Enhancing customer experiences through personalized, location-based services powered by edge computing.

- Telecommunications: Improving network performance and enabling low-latency applications in 5G networks through multi-access edge computing.

Conclusion

In conclusion, the future of computing lies both in the cloud and at the edge. Each approach and strategy offers a unique set of advantages, catering to the diverse needs of industries.

Organizations must carefully consider these deployment architectures, aligning them with their requirements to unlock the full potential of edge computing.

As we continue to unveil the practical applications of these architectures, the journey towards a more responsive, efficient, and connected future accelerates.

The edge is not just a destination; it's a dynamic frontier where innovation thrives.
